Re/drawing interactions: an EM/CA video tools development workshop

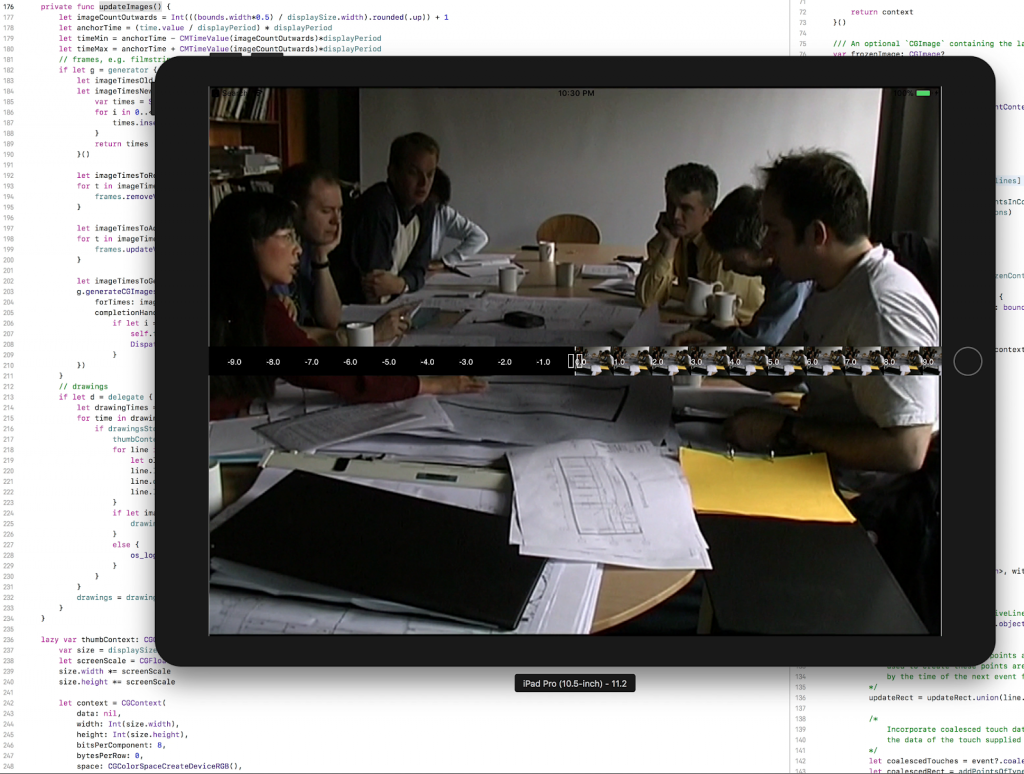

As part of the Drawing Interactions project (see report), Pat Healey, Toby Harris, Claude Heath, Sophie Skach and I ran a workshop at New Developments in Ethnomethodology in London (March 2018) to teach interaction analysts how and why to draw.

Here’s the workshop abstract:

Ethnomethodological and conversation analytic (EM/CA) studies often use video software for transcription, analysis and presentation, but no such tools are designed specifically for EM/CA. There are, however, many software tools commonly used to support EM/CA research processes (Hepburn & Bolden, 2017 pp. 152-169; Heath, Hindmarsh & Luff 2010 pp. 109-132), all of which adopt one of two major paradigms. On the one hand, horizontal scrolling timeline partition-editors such as ELAN (2017) facilitate the annotation of multiple ‘tiers’ of simultaneous activities. On the other hand, vertical ‘lists of turns’ editors such as CLAN (MacWhinney, 1992) facilitate a digital, media-synced version of Jefferson’s representations of turn-by-turn talk. However, these tools and paradigms were primarily designed to support forms of coding and computational analysis in interaction research that have been anathema to EM/CA approaches (Schegloff 1993). Their assumptions about how video recordings are processed, analyzed and rendered as data may have significant but unexamined consequences for EM/CA research. This 2.5-hour workshop will reflect on the praxeology of video analysis by running a series of activities that involve sharing and discussing diverse EM/CA methods of working with video. Attendees are invited to bring a video they have worked up from ‘raw data’ to publication, which we will re-analyze live using methods drawn from traditions of life drawing and still life. A small development team will build a series of paper and software prototypes over the course of the workshop week, aiming to put participants’ ideas and suggestions into practice. Overall, the workshop aims to inform the ongoing development of software tools designed reflexively to explore, support, and question the ways we use video and software tools in EM/CA research.

References

ELAN (Version 5.0.0-beta) [Computer software]. (2017, April 18). Nijmegen: Max Planck Institute for Psycholinguistics. Retrieved from https://tla.mpi.nl/tools/tla-tools/elan/

Heath, C., Hindmarsh, J., & Luff, P. (2010). Video in qualitative research: analysing social interaction in everyday life. London: Sage Publications.

Hepburn, A., & Bolden, G. B. (2017). Transcribing for social research. London: Sage.

MacWhinney, B. (1992). The CHILDES project: Tools for analyzing talk. Child Language Teaching and Therapy, (2000).

Schegloff, E. A. (1993). Reflections on Quantification in the Study of Conversation. Research on Language & Social Interaction, 26(1), 99–128.
