How do people with dementia and their carers use Alexa-type devices in the home?

We have a fully funded PhD position available (deadline 6th March 2020) to work with me, Prof. Charles Antaki and Prof. Liz Peel, in collaboration with The Alzheimer’s Society, to explore the opportunities, risks and wider issues surrounding the use of AI-based voice technologies such as the Amazon Echo and home automation systems in the lives of people with dementia.

Voice technologies are often marketed as enabling people’s independence. For example, Amazon’s “Sharing is Caring” advert for its AI-based voice assistant Alexa shows an elderly man being taught to use the ‘remind me’ function of an Amazon Echo smart speaker by his young carer. But how accessible are these technologies in practice? How are people with dementia and their carers using them in creative ways to solve everyday access issues? And what are the policy implications, given the consent and privacy issues these devices raise?

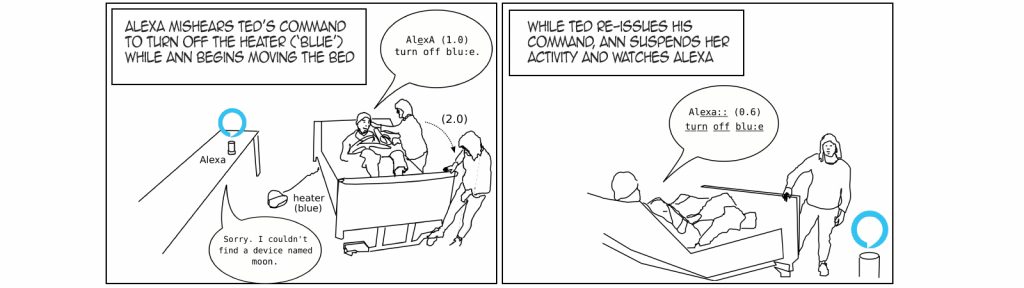

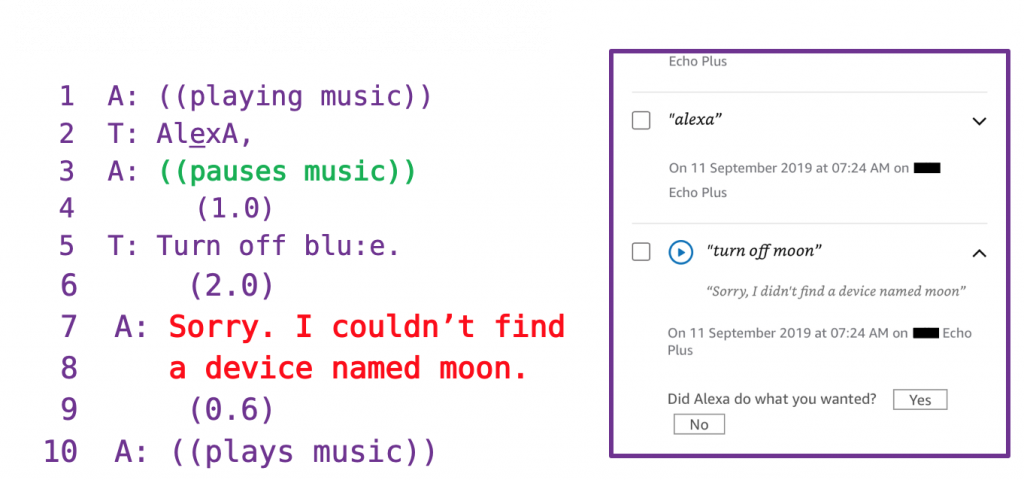

The project will combine micro and macro-levels of analysis and research. On the micro-level, the successful applicant will be trained and/or supported to use video analysis to study how people with dementia collaborate with their assistants to adapt and use voice technologies to solve everyday access issues. On the macro-level, the project will involve working on larger scale operations and policy issues with Ian Mcreath and Hannah Brayford at The Alzheimer’s Society and within the wider Dementia Choices Action Network (#DCAN).

Through this collaboration, the research will influence how new technologies are used, interpreted and integrated into personalised care planning across health, social care and voluntary, community and social enterprise sectors.

The deadline is the 6th March 2020 (see the job ad for application details). For a first-round application, all you need to submit is a CV and a short form with a brief personal statement. We welcome applications from people of all backgrounds and levels of research experience (training in specific research methods will be provided where necessary). We especially welcome applications from people with first-hand experience of disability and dementia, or with experience of working as a formal or informal carer/personal assistant.

This research will form part of the Adept at Adaptation project, looking at how disabled people adapt consumer AI-based voice technologies to support their independence across a wide range of impairment groups and applied settings.

The successful applicant will be supported through the ESRC Midlands Doctoral Training Partnership, and will have access to a range of highly relevant supervision and training through the Centre for Research in Communication and Culture at Loughborough University.

Feel free to contact me on s.b.albert@lboro.ac.uk with any informal inquiries about the post.
