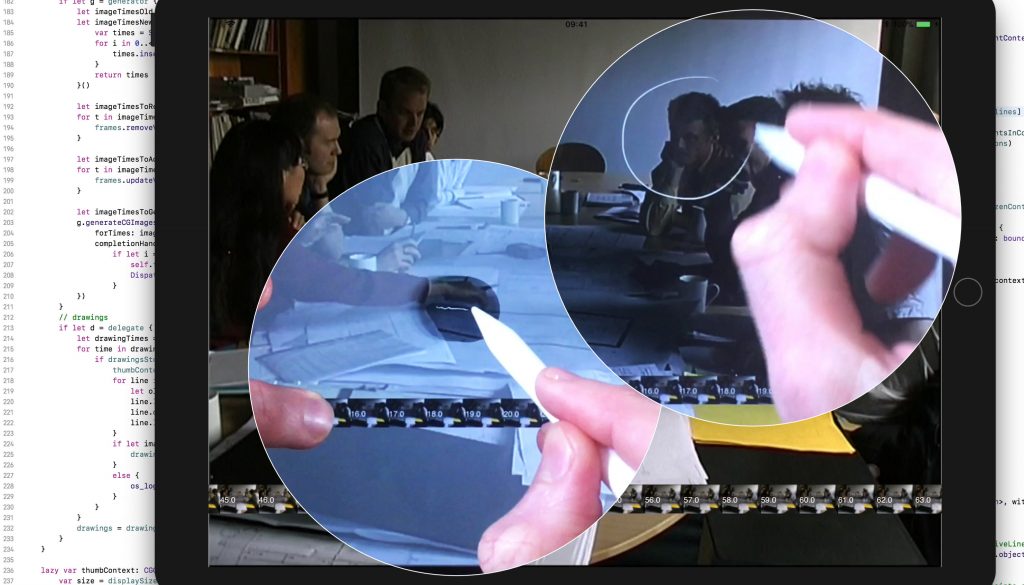

Collecting data from streaming cameras with youtube-dl

I’ve been fascinated by a live camera stream of a UK street since the lockdown began on 23 March 2020, because it shows how pedestrians interpret the 2m physical distancing rule.

Some of the data from this camera went into a very nice ROLSI blog post about the emergence of the ‘social swerve’ by Eric Laurier, Magnus Hamann and Liz Stokoe, which I helped with.

I thought others might find it useful to read a quick how-to about grabbing video from live cameras – it’s a great way to get a quick and dirty bit of data to test a working hunch or do some rough analysis.

There are thousands of live cameras that stream to YouTube, but capturing more than a few seconds with ordinary screen capture tools can be cumbersome.

NB: before doing this for research purposes, check that doing so is compliant with relevant regional/institutional ethical guidelines.

Step 1: download and configure youtube-dl

youtube-dl is a command-line utility, which means you run it from the terminal window of your operating system of choice. It works fine on any Unix, on Windows and on macOS.

Don’t be intimidated if you’ve never used a command line before: you won’t have to do much beyond some copying and pasting.

I can’t do an installation how-to, but there are plenty online:

Mac:

Windows:

I’ll assume that if you’re a Unix user, you know how to do this.
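Whichever guide you follow, it is worth confirming that the install worked before moving on. Here is a minimal Python sketch of that check. It assumes the tool is installed under its usual name, youtube-dl, and is on your PATH; the function names find_tool and tool_version are only illustrative and are not part of youtube-dl.

```python
import shutil
import subprocess


def find_tool(name="youtube-dl"):
    """Return the full path to a command-line tool, or None if it is not on PATH."""
    return shutil.which(name)


def tool_version(name="youtube-dl"):
    """Return the version string the tool reports, or None if it is not installed."""
    path = find_tool(name)
    if path is None:
        return None
    # --version asks youtube-dl to print its version number and exit
    result = subprocess.run([path, "--version"], capture_output=True, text=True)
    return result.stdout.strip()
```

If tool_version() returns None, the terminal cannot find youtube-dl, and the installation guide for your system is the place to look.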

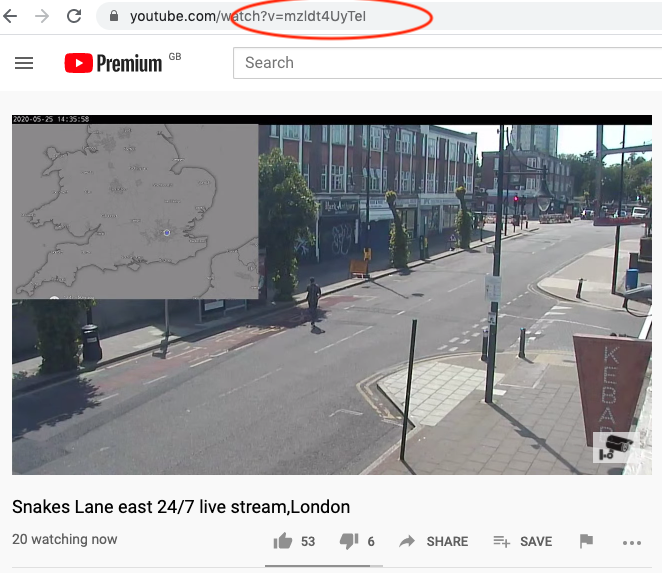

Step 2: copy and paste the video ID from the stream

Every YouTube video has a video ID that you can copy from the address bar of your browser: it is the string that follows v= in the address. Here’s the one I used for the blog post mentioned above, which we’ve affectionately nicknamed the ‘kebab corpus’. The video ID is circled in red:
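If you would rather not pick the ID out by eye, it can be pulled from a pasted address with a few lines of Python. This is a rough sketch that assumes the two common address shapes (a youtube.com/watch link with a v= parameter, and a youtu.be short link); the function name extract_video_id and the ID EXAMPLE_ID1 are placeholders, not the real stream.

```python
from urllib.parse import urlparse, parse_qs


def extract_video_id(url):
    """Return the video ID from a YouTube address, or None if none is found."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    if host == "youtu.be":
        # Short links carry the ID as the path: https://youtu.be/<id>
        return parsed.path.strip("/") or None
    if host == "youtube.com" or host.endswith(".youtube.com"):
        # Standard links carry the ID in the "v" query parameter
        values = parse_qs(parsed.query).get("v")
        return values[0] if values else None
    return None


# Placeholder address for illustration only
print(extract_video_id("https://www.youtube.com/watch?v=EXAMPLE_ID1"))
```

Anything after an & in the address (a timestamp, for example) is ignored, so only the ID itself comes back.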

Step 3: use youtube-dl to begin gathering your video data

This bit is a little hacky, in that it doesn’t really use the software as intended or documented, so I’ve created a short how-to video. There might be better ways. If so, please let me know!

As I mention in that video – probably best not to leave youtube-dl running for too long on a stream as you might end up losing your video if something happens to interrupt the stream. I’ve captured up to half an hour at a time.
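One way to keep captures short is to start youtube-dl from a small script and stop it after a fixed time. The sketch below is an assumption about how that could be done, not necessarily the method shown in the video. It assumes macOS or Linux (sending an interrupt signal this way is not supported on Windows), that youtube-dl is on your PATH, and that stopping it with the equivalent of Ctrl-C leaves a playable file, which may depend on your youtube-dl and ffmpeg versions. The names build_command and capture_stream are illustrative.

```python
import signal
import subprocess


def build_command(video_id, output_path):
    """Build the youtube-dl command line for one capture."""
    return [
        "youtube-dl",
        "-f", "best",        # ask for the best single-file format on offer
        "--no-part",         # write straight to the output file, not a .part file
        "-o", output_path,   # where to save the video
        f"https://www.youtube.com/watch?v={video_id}",
    ]


def capture_stream(video_id, output_path, seconds=1800):
    """Record a live stream for roughly `seconds`, then stop youtube-dl."""
    proc = subprocess.Popen(build_command(video_id, output_path))
    try:
        # If the stream ends by itself before the limit, this simply returns
        proc.wait(timeout=seconds)
    except subprocess.TimeoutExpired:
        # Time is up: send the same signal as pressing Ctrl-C (Unix-like systems only)
        proc.send_signal(signal.SIGINT)
        proc.wait()
    return proc.returncode
```

Calling capture_stream("EXAMPLE_ID1", "clip.mp4", seconds=60) with a real video ID in place of the placeholder is a sensible first test: check that the one-minute file plays before trusting a half-hour capture.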

It’s possible to create scripts and automated actions for a variety of operating systems to do this all for you on a schedule, but if you need extensive video archives, I’d recommend contacting the owner of the stream to see if they can simply send you their high-quality YouTube archives.
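If you do script it, a useful first step is to plan the captures as a series of short, timestamped chunks so that one interrupted recording never costs more than one file. This is a minimal sketch of that planning step only; it does not start any recordings. The half-hour default, the .mp4 extension, the "stream" prefix and the function names are all assumptions made for illustration.

```python
from datetime import datetime, timedelta


def chunk_filename(prefix, start):
    """Name a chunk after the moment its capture is due to start."""
    return f"{prefix}_{start:%Y%m%d_%H%M%S}.mp4"


def plan_chunks(first_start, count, minutes=30, prefix="stream"):
    """Return a list of (start_time, filename) pairs for back-to-back captures."""
    plan = []
    for i in range(count):
        start = first_start + timedelta(minutes=i * minutes)
        plan.append((start, chunk_filename(prefix, start)))
    return plan


# Example: three half-hour chunks starting at 09:00
for start, name in plan_chunks(datetime(2020, 3, 23, 9, 0), 3):
    print(start, name)
```

Each entry can then be handed to whatever scheduler your operating system provides, with the filename passed to youtube-dl as the output path.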
