What ‘counts’ as explanation in social interaction?

Saul Albert∗, Hendrik Buschmeier, Katharina Cyra, Christiane Even, Magnus Hamann, Jakub Mlynář, Hannah Pelikan, Martin Porcheron, Stuart Reeves, Philippe Sormani & Sylvaine Tuncer†

Citation: Albert, S., Buschmeier, H., Cyra, K., Even, C., Hamann, M., Licoppe, C., Mlynář, J., Pelikan, H., Porcheron, M., Reeves, S., Rudaz, D., Sormani, P., Tuncer, S. (2023, November 6-7). What ‘counts’ as an explanation in social interaction? 2nd TRR 318 Conference Measuring Understanding, University of Paderborn, Paderborn, Germany.

Background

Measuring explainability in explainable AI (X-AI) usually involves technical methods for evaluating and auditing automated decision-making processes to highlight and eliminate potential sources of bias. By contrast, human practices of explaining usually involve doing explanation as a social action (Miller, 2019). X-AI’s transparent machine learning models can help to explain the proprietary ‘black boxes’ often used by high-stakes decision support systems in legal, financial, or diagnostic contexts (Rudin, 2019). However, as Rohlfing et al. (2021) point out, effective explanations (however technically accurate they may be) always involve processes of co-construction and mutual comprehension. Explanations usually involve at least two parties: the system and the user interacting with the system at a particular point in time, and ongoing contributions from both explainer and explainee are required. Without accommodating this kind of social action, X-AI models appear to offer context-free, one-size-fits-all technical solutions that may not satisfy users’ expectations as to what constitutes a proper explanation.

What counts as an explanation?

If we accept that explanations are not simply stand-alone statements of causal relation, it can be hard to identify what should ‘count’ as an explanation in interaction (Ingram, Andrews, and Pitt, 2019). Research into explanation in ordinary human conversation has shown that explanations can be achieved through various practices tied to the local context of production (cf. Schegloff, 1997). Moreover, explanations do not just appear anywhere in an interaction, but they are recurrently produced as responsive actions to fit an interactional ‘slot’ where someone has been called to account for something (Antaki, 1996). Sometimes explanations may also be produced as ‘initial’ moves in a sequence of action. In such cases, they are often designed to anticipate resistance and deal with, e.g., the routine contingencies that people cite when refusing to comply with an instruction (Antaki and Kent, 2012). Explanations as actions also perform and ‘talk into being’ social and institutional relationships such as doctor/patient, or teacher/student (Heritage and Clayman, 2010). Explainable AI systems, in this sense, become ‘accountable’ or ‘transparent’ through their social uses (Button, 2003; Ehsan et al., 2021). We draw on concepts of explanation from Ethnomethodology and Conversation Analysis (Garfinkel, 1967; Garfinkel, 2002; Sacks, Schegloff, and Jefferson, 1974), Discursive Psychology (Edwards and Potter, 1992; Wiggins, 2016), and cognate fields like Distributed Cognition (Hutchins, 1995) and Enactivism (Di Paolo, Cuffari, and De Jaegher, 2018) to outline an empirical approach to explanation as a context-sensitive situated social practice (Suchman, 1987).

Explanations as joint actions. Shared understanding is co-constructed through the achievement of coordinated social action (see e.g., Clark, 1996; Linell, 2009; Goodwin, 2017). Necessary and sufficient explanations cannot, therefore, be predefined by AI designers. Instead, explanation may be achieved through joint actions with an AI in a specific context. If a system displays its capabilities in ways that match users’ expectations, they may achieve explanation (as contingently shared understanding) for all present intents and purposes. Explanation in this sense can never be considered complete – it could always be elaborated (cf. Garfinkel, 1967, pp. 73–75). While similar situations would involve predictability and regularity, this concept of explanation requires that participants jointly establish the relevant criteria and form for sufficient explanation with reference to the tasks and present purposes at hand, such as formulations of examples (Lee and Mlynář, 2023).

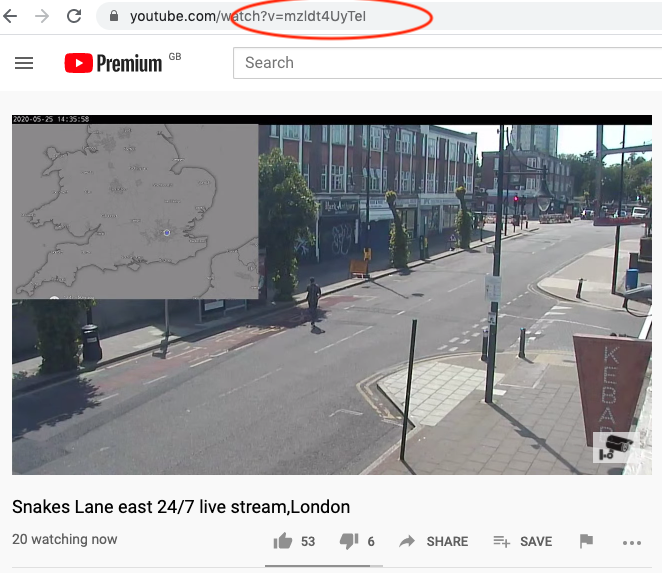

The self-explanatory nature of the social world. Explanations are not only explicitly formulated, but are an inherent feature of the social world. Even without giving an explicit explanation, the design of an object provides ‘implicit’ explanations. Gibson’s (1979) concept of affordances, often used in system development, highlights that specific design features make specific actions relevant, e.g., a button that should be pressed, a lever that should be pulled (Norman, 1990). Situated social actions are also inherently recognisable (Levinson, 2013), even when mediated through AI such as in autonomous driving systems (Stayton, 2020). A slowly driving car will be recognised as not from the area (see, e.g., Stayton, 2020) and moving in certain ways can be recognised as, e.g., giving way to a pedestrian (Moore et al., 2019; Haddington and Rauniomaa, 2014). We could harness self-explanatory visibility (Nielsen, 1994) to design AI behaviours that are recognisable as specific social actions.

Miscommunication as explanation. The practices of repair – the methods we use to recognise and deal with miscommunication (problems of speaking, hearing and understanding) as they occur in everyday interaction (Schegloff, Jefferson, and Sacks, 1977) – constitute pragmatic forms of explanation when they allow us to identify and resolve breakdowns of mutual understanding. For example, when someone says “huh?” in response to a ‘trouble source’ turn in spoken conversation, the speaker usually repeats the entire prior turn (Dingemanse, Torreira, and Enfield, 2013), whereas if the recipient had said “where?”, this might have solicited a repeat or reformulation of only the misheard place reference. These practices range from tacit displays of uncertainty to explicit requests for clarification that solicit fully formed explanations and accounts (Raymond and Sidnell, 2019). These methods for real-time resolution of mutual (mis)understanding allow us, at least, to proceed with joint action in ways that establish and uphold explanation in action.

Explainability in action

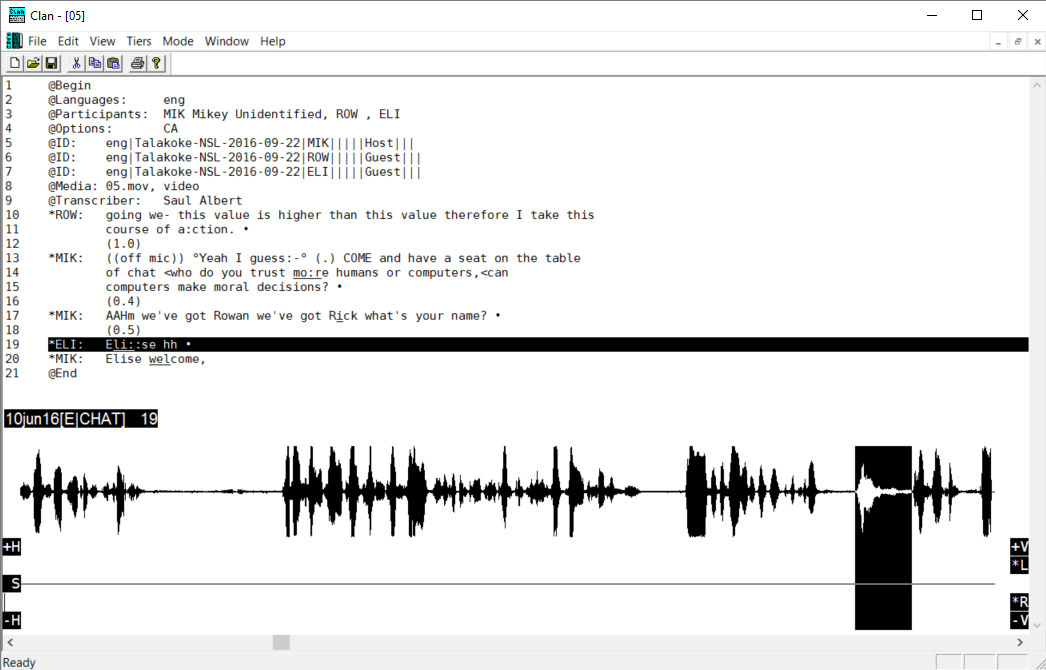

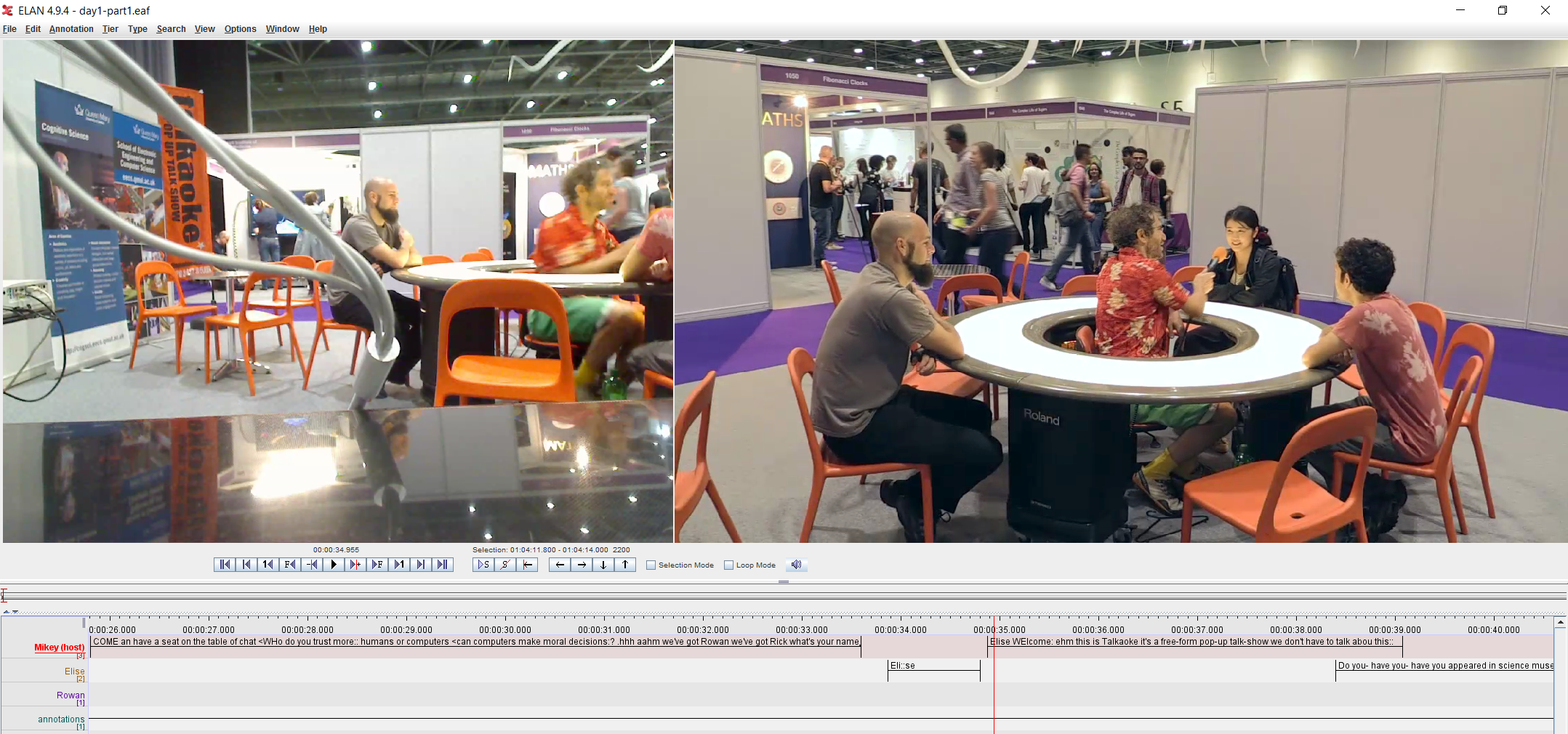

This paper will use Conversation Analysis to examine episodes of Human-AI interactions, from a wide range of everyday interactional settings, and involving different technologies, user groups, and task orientations. Rather than attempting to establish a systematic or generalisable metric for explainability across interactional settings, the aim here is to encourage an extension of – and critical reflections on – our technical conceptualisation of explanation in X-AI.

References

Antaki, Charles (1994). Explaining and Arguing: The Social Organization of Accounts. Sage.

Antaki, Charles (1996). “Explanation slots as resources in interaction”. In: British Journal of Social Psychology 35, pp. 415–432. doi: 10.1111/j.2044-8309.1996.tb01105.x.

Antaki, Charles and Alexandra Kent (2012). “Telling people what to do (and, sometimes, why): Contingency, entitlement and explanation in staff requests to adults with intellectual impairments”. In: Journal of Pragmatics 44, pp. 876–889. doi: 10.1016/j.pragma.2012.03.014.

Button, Graham (2003). “Studies of work in Human-Computer Interaction”. In: HCI Models, Theories, and Frameworks. Toward a Multidisciplinary Science. Ed. by John M. Carroll. San Francisco, CA, USA: Morgan Kaufmann, pp. 357–380. doi: 10.1016/b978-155860808-5/50013-7.

Clark, Herbert H. (1996). Using Language. Cambridge, UK: Cambridge University Press. doi: 10.1017/CBO9780511620539.

Deppermann, Arnulf and Michael Haugh, eds. (2021). Action Ascription in Interaction. Cambridge University Press.

Di Paolo, Ezequiel A., Elena Clare Cuffari, and Hanne De Jaegher (2018). Linguistic Bodies: The Continuity between Life and Language. Cambridge, MA, USA: The MIT Press. doi: 10.7551/mitpress/11244.001.0001.

Dingemanse, Mark, Francisco Torreira, and Nick J. Enfield (2013). “Is “huh?” a universal word? Conversational infrastructure and the convergent evolution of linguistic items”. In: PLoS ONE 8, e78273. doi: 10.1371/journal.pone.0078273.

Edwards, Derek and Jonathan Potter (1992). Discursive Psychology. London, UK: Sage.

Ehsan, Upol et al. (2021). “Expanding explainability: Towards social transparency in AI systems”. In: Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems. Yokohama, Japan. doi: 10.1145/3411764.3445188.

Garfinkel, Harold (1967). Studies in Ethnomethodology. Englewood Cliffs, NJ, USA: Prentice Hall.

Garfinkel, Harold (2002). Ethnomethodology’s program: Working out Durkheim’s aphorism. Lanham, Boulder, New York, Oxford: Rowman & Littlefield Publishers.

Garfinkel, Harold (2021). “Ethnomethodological Misreading of Aron Gurwitsch on the Phenomenal Field”. In: Human Studies 44(1), pp. 19–42. doi: 10.1007/s10746-020-09566-z.

Gibson, James J. (1979). The Ecological Approach to Visual Perception. New York, NY, USA: Psychology Press. doi: 10.4324/9781315740218.

Gill, V. T. and D. W. Maynard (2006). “Explaining illness: Patients’ proposals and physicians’ responses”. In: Communication in Medical Care: Interaction between Primary Care Physicians and Patients. Ed. by D. W. Maynard and J. Heritage. Cambridge University Press, pp. 115–150. doi: 10.1017/CBO9780511607172.007.

Goodwin, Charles (2017). Co-Operative Action. New York, NY, USA: Cambridge University Press. doi: 10.1017/9781139016735.

Haddington, Pentti and Mirka Rauniomaa (2014). “Interaction between road users”. In: Space and Culture 17, pp. 176–190. doi: 10.1177/1206331213508498.

Heller, V. (2016). “Meanings at hand: Coordinating semiotic resources in explaining mathematical terms in classroom discourse”. In: Classroom Discourse 7(3), pp. 253–275. doi: 10.1080/19463014.2016.1207551.

Heritage, John (1988). “Explanations as accounts: A conversation analytic perspective”. In: Analysing Everyday Explanation: A Casebook of Methods. Ed. by Charles Antaki. Sage Publications, pp. 127–144.

Heritage, John and Steven Clayman (2010). Talk in Action. Interactions, Identities, and Institutions. Chichester, UK: Wiley. doi: 10.1002/9781444318135.

Hindmarsh, J., P. Reynolds, and S. Dunne (2011). “Exhibiting understanding: The body in apprenticeship”. In: Journal of Pragmatics 43(2), pp. 489–503. doi: 10.1016/j.pragma.2009.09.008.

Hutchins, Edwin (1995). Cognition in the Wild. Cambridge, MA, USA: The MIT Press.

Ingram, J., Nick Andrews, and Andrea Pitt (2019). “When students offer explanations without the teacher explicitly asking them to”. In: Educational Studies in Mathematics 101, pp. 51–66. doi: 10.1007/s10649-018-9873-9.

Lee, Yeji and Jakub Mlynář (2023). ““For example” formulations and the interactional work of exemplification”. In: Human Studies. doi: 10.1007/s10746-023-09665-7.

Levinson, Stephen C. (2013). “Action formation and ascription”. In: The Handbook of Conversation Analysis. Ed. by Jack Sidnell and Tanya Stivers. Chichester, UK: Wiley-Blackwell, pp. 101–130. doi: 10.1002/9781118325001.ch6.

Linell, Per (2009). Rethinking language, mind, and world dialogically: Interactional and contextual theories of human sense-making. Charlotte: Information Age Publishing Inc.

Miller, Tim (2019). “Explanation in Artificial Intelligence: Insights from the social sciences”. In: Artificial Intelligence 267, pp. 1–38. doi: 10.1016/j.artint.2018.07.007.

Moore, Dylan et al. (2019). “The Case for Implicit External Human-Machine Interfaces for Autonomous Vehicles”. In: Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications. Utrecht, The Netherlands, pp. 295–307. doi: 10.1145/3342197.3345320.

Nielsen, Jakob (1994). “Enhancing the explanatory power of usability heuristics”. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. Boston, MA, USA, pp. 152–158. doi: 10.1145/191666.191729.

Norman, Donald A. (1990). The Design of Everyday Things. New York, NY, USA: Basic Books.

Raymond, Geoffrey and Jack Sidnell (2019). “Interaction at the boundaries of a world known in common: initiating repair with “What do you mean?””. In: Research on Language and Social Interaction 52, pp. 177–192. doi: 10.1080/08351813.2019.1608100.

Robinson, J. D. (2016). “Accountability in Social Interaction”. In: Accountability in Social Interaction. Ed. by J. D. Robinson. Oxford University Press, pp. 1–44. doi: 10.1093/acprof:oso/9780190210557.003.0001.

Rohlfing, Katharina et al. (2021). “Explanation as a social practice: Toward a conceptual framework for the social design of AI systems”. In: IEEE Transactions on Cognitive and Developmental Systems 13, pp. 717–728. doi: 10.1109/TCDS.2020.3044366.

Rudin, Cynthia (2019). “Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead”. In: Nature Machine Intelligence 1, pp. 206–215. doi: 10.1038/s42256-019-0048-x.

Sacks, Harvey, Emanuel A. Schegloff, and Gail Jefferson (1974). “A simplest systematics for the organization of turn-taking for conversation”. In: Language 50, pp. 696–735.

Schegloff, Emanuel A. (1997). “Whose Text? Whose Context?” In: Discourse & Society 8, pp. 165–187. doi: 10.1177/0957926597008002002.

Schegloff, Emanuel A., Gail Jefferson, and Harvey Sacks (1977). “The preference for self-correction in the organization of repair in conversation”. In: Language 53, pp. 361–382.

Sidnell, Jack (2012). “Declaratives, Questioning, Defeasibility”. In: Research on Language & Social Interaction 45(1), pp. 53–60. doi: 10.1080/08351813.2012.646686.

Stayton, Erik Lee (2020). “Humanizing Autonomy: Social scientists’ and engineers’ futures for robotic cars”. PhD thesis. Cambridge, MA, USA: Massachusetts Institute of Technology. doi: 1721.1/129050.

Suchman, Lucy A. (1987). Plans and Situated Actions: The Problem of Human-Machine Communication. Cambridge, UK: Cambridge University Press.

Wiggins, Sally (2016). Discursive Psychology. Theory, Method and Applications. Sage. doi: 10.4135/9781473983335.

∗Corresponding author: s.b.albert@lboro.ac.uk. Order of authors is alphabetical.

†SA, MH: Loughborough University; HB: Bielefeld University; KC: University of Duisburg-Essen; CE: Ruprecht Karl University of Heidelberg; JM: HES-SO Valais-Wallis; HP: Linköping University; MP: Swansea University; SR: University of Nottingham; PS: University of Lausanne; ST: King’s College
