This scenario is designed to elicit and capture conversation between a group of people who are watching a specific episode of Dr. Who together.

The aim is to compare the existing formal metadata for this episode with this speculative ‘conversational metadata’, and to evaluate the latter as an alternative representation of the same media object: Dr Who, Season 4, Episode 1, Partners in Crime.

The Setup

Two groups of eight people are invited to watch an episode of Dr Who together on a large screen. During the screening they use their laptops and a simple text/image/video annotation interface to type short messages or send images to the screen, where they appear as an overlay on top of the video of Dr Who.

The room is laid out in a ‘living room’ arrangement to support co-present viewing and interaction between participants, with comfortable seating arranged in a broad semi-circle, oriented towards a large projected video screen about ten feet away. Each participant is asked to bring their own laptop, tablet PC, or other wifi-enabled device with a web browser.

Once all participants are on the network, there is an introductory briefing with a presentation explaining the aims of the project. Participants are told that they are free to walk around, use their laptops or just talk, and help themselves to food and drink during the screening.

The Annotation Tool

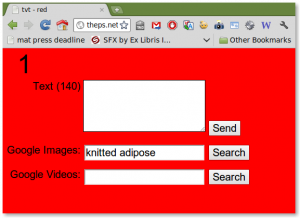

The system that participants use on their laptops, tablets or mobile phones has a simple web-based client. It lets viewers choose a colour to identify themselves on the screen, then type up to 140 characters of text or search for images and video, before sending them to the main screen.
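As a rough illustration of what one item sent from the client might look like as data, here is a minimal Python sketch. It is not the tool's actual code: the `Annotation` class, the `make_annotation` helper and all field names are hypothetical, and the `video_time_s` field (the playback position at which an item was sent) is an assumption added because it would be needed to relate a message to a moment in the episode.

```python
from dataclasses import dataclass

MAX_TEXT_LENGTH = 140  # character limit for text messages, as described above
KINDS = ("text", "image", "video")


@dataclass(frozen=True)
class Annotation:
    """One item sent from a viewer's device to the main screen (hypothetical shape)."""
    colour: str          # colour the viewer chose to identify themselves
    kind: str            # one of KINDS
    body: str            # message text, or a URL for an image or video
    video_time_s: float  # assumed field: playback position when the item was sent


def make_annotation(colour: str, kind: str, body: str, video_time_s: float) -> Annotation:
    """Check the input and build an Annotation, raising ValueError if it is invalid."""
    if kind not in KINDS:
        raise ValueError(f"unknown annotation kind: {kind!r}")
    if not body.strip():
        raise ValueError("annotation body is empty")
    if kind == "text" and len(body) > MAX_TEXT_LENGTH:
        raise ValueError(f"text exceeds {MAX_TEXT_LENGTH} characters")
    if video_time_s < 0:
        raise ValueError("video time cannot be negative")
    return Annotation(colour, kind, body, video_time_s)
```

A record of this kind keeps the viewer's colour, the content and the point in the video together, which is the minimum needed to treat a message as metadata about the episode.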

The Display Screen

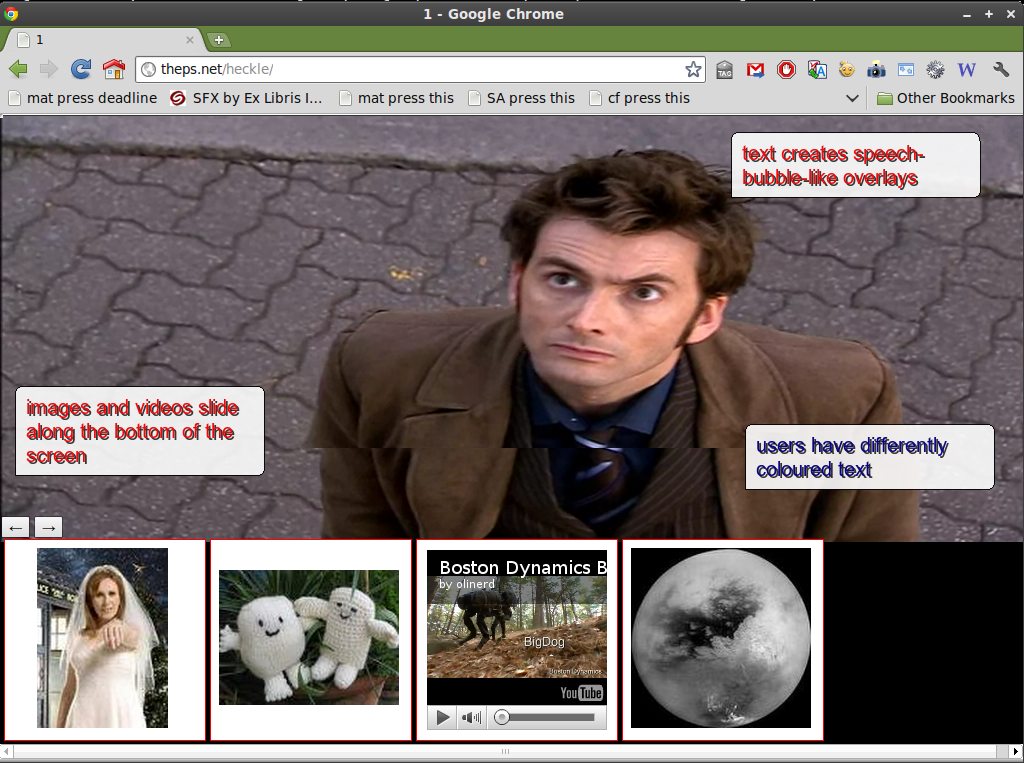

The video of Dr Who is projected on a ‘main’ screen in a fullscreen browser window, alongside text, images and video clips sent by viewers. The images and videos sent by users have a coloured outline, and text-bubbles are coloured to indicate who posted them.

Images and videos run underneath the video in a ‘media bar’, while text bubbles posted by users drop onto the screen in random positions, but can be re-arranged on the screen or deleted by a ‘facilitator’.
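The behaviour of the display can be sketched as a small state model. This is a minimal Python sketch under stated assumptions, not the system's implementation: the `DisplayScreen` and `Bubble` classes and their method names are hypothetical, and positions are assumed to be fractions of the screen width and height.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Bubble:
    """A text bubble overlaid on the video (hypothetical shape)."""
    colour: str  # identifies the viewer who posted it
    text: str
    x: float     # assumed: fraction of screen width, 0.0 to 1.0
    y: float     # assumed: fraction of screen height, 0.0 to 1.0


@dataclass
class DisplayScreen:
    media_bar: list = field(default_factory=list)  # (colour, url) pairs, in posting order
    bubbles: dict = field(default_factory=dict)    # bubble id -> Bubble
    next_id: int = 0

    def post_media(self, colour: str, url: str) -> None:
        """Images and videos are appended to the media bar under the video."""
        self.media_bar.append((colour, url))

    def post_text(self, colour: str, text: str, rng=random) -> int:
        """Text bubbles drop onto the screen at a random position."""
        bubble_id = self.next_id
        self.next_id += 1
        self.bubbles[bubble_id] = Bubble(colour, text, rng.random(), rng.random())
        return bubble_id

    def move_bubble(self, bubble_id: int, x: float, y: float) -> None:
        """Facilitator action: re-arrange a bubble on the screen."""
        bubble = self.bubbles[bubble_id]
        bubble.x, bubble.y = x, y

    def delete_bubble(self, bubble_id: int) -> None:
        """Facilitator action: remove a bubble from the screen."""
        del self.bubbles[bubble_id]
```

Keeping the facilitator's actions as separate methods makes it possible to log them later, since moving or deleting a bubble changes what the other viewers see.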

Rationale

This ‘conversational scenario’ is a hybrid of various methods in which researchers have contrived situations to elicit data from participants. Before making any claims about the data gathered, some clarification of the purpose and methods of the scenario is necessary.

Ethnographic studies of Social TV have tended to use audiovisual recordings of TV viewers in naturalistic settings as their primary source, with analytical methods such as Conversation Analysis and participant observation used to deepen understanding of how people use existing TV devices and infrastructures in a social context.

HCI approaches to designing Social TV systems have built novel systems and undertaken user testing and competitive analysis of existing systems in order to better understand the relationship between people’s social behaviours around TV, and the heuristics of speculative Social TV{{1}} devices and services.

Semantic Web researchers have opportunistically found ways to ‘harvest’ and analyse communications activity from the Social Web, as well as new Social TV network services that track users’ TV viewing activity as a basis for content recommendations and social communication.

All of these approaches will be extremely useful in developing better conversational annotation systems, and improving understanding and design of Social TV for usability, and for making better recommendations.

Although the conversational scenario described borrows from each of these methods, its primary objective is to gather data from people’s mediated conversations around a TV in order to build a case for seeing and using that data as metadata.

System design, usability, viewer behaviour, user profiles, choices of video material, and the effect those issues have on the quality and nature of the captured metadata are secondary to this first step: ascertaining whether conversations can be captured and treated as metadata pertaining to the video in the first place.

[[1]]I am using the term Social TV, following one of the earliest papers to coin the phrase, by Oehlberg et al. (2006), to refer to Interactive TV systems that concentrate on the opportunities for viewer-to-viewer interaction afforded by the convergence of telecoms and broadcast infrastructures. Oehlberg, L., Ducheneaut, N., Thornton, J. D., Moore, R. J., & Nickell, E. (2006). Social TV: Designing for distributed, sociable television viewing. Proc. EuroITV (Vol. 2006, pp. 25–26). Retrieved from http://best.berkeley.edu/~lora/Publications/SocialTV_EuroITV06.pdf [[1]]