The pragmatics of showing off your new artwork to friends

In Anita Pomerantz’s canonical paper on preference for agreement and disagreement with assessments in conversation, I found two fascinating examples of precisely the phenomena I was looking for in the ways people talk about art.

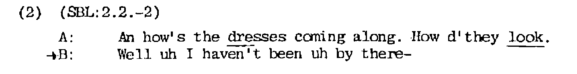

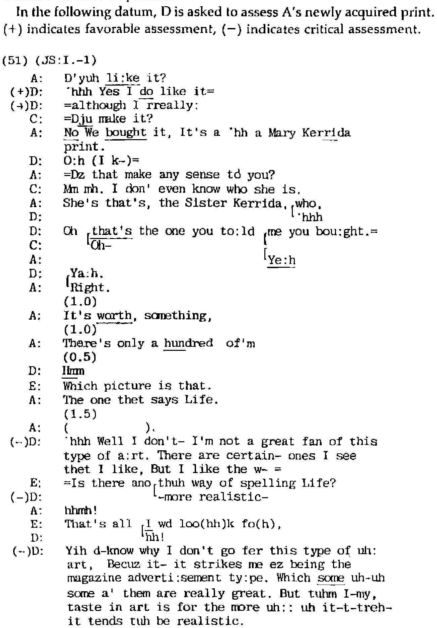

Pomerantz uses the example below to demonstrate what she calls ‘second-assessment productions: agreement dispreferred’. By this she means that when someone produces a self-deprecating assessment in conversation, it invites either agreement or disagreement with that self-deprecation, but disagreement is generally preferred.

As is often the case with this kind of conversation analysis, the evidence for one action or response being preferred is found by observing what happens when the ‘dispreferred’ action or response is supplied. In Pomerantz’s examples of assessments, ‘dispreferred’ conversational responses, often contradictions and disagreements, are characterised by delays, pauses, and other avoidances or ‘softenings’ of the dispreferred response. It is in this context that Pomerantz produces the example below, as evidence for the structure of how conversational participants tend to withhold what she calls ‘coparticipant criticism’: basically, worming their way around insulting each other’s sensibilities.

However, this extract also shows some of the features of aesthetic conversations that I intuitively shaped into my research question about how the criteria for an assessment become relevant referents for sub-assessments in an aesthetic evaluation.

Pomerantz identifies this entire exchange only as a series of deferment turns, in which D’s (dispreferred) critical assessment is delayed, softened or otherwise minimised.

With my question about the negotiation of criteria for judgement in mind, these exchanges seem to support the idea that these deferments of dispreferred responses include a negotiation of the referents of the assessments they precede:

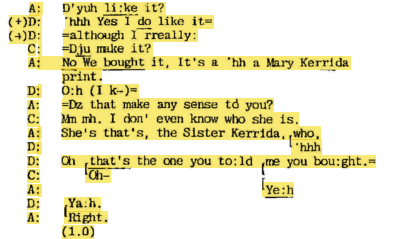

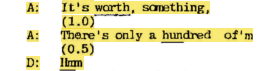

In the exchange highlighted above, A offers up a print for assessment, D gives a positive assessment (softening an imminent critical assessment, Pomerantz suggests), and C interrupts, identifying one criterion for judgement: the question of authorship. A, C and D then negotiate claims to knowledge of this referent (the author of the print).

Two further criteria are then raised by A. The first is the assessment that the print is monetarily valuable, which is met with a silence (which Pomerantz sees as an implicit marker of a dispreferred second assessment, in this case a disagreement). A then raises the rarity of the print (“only a hundred of’m”), which D acknowledges with ‘Hrm’ after a further pause.

Pomerantz observes in this paper that acknowledgements of prior assessments do not imply claims of access to a referent. By acknowledging A’s claim about the rarity and value of the piece without agreeing or disagreeing, D acknowledges only the claim but, as marked by a further pause, does not participate in claiming that knowledge.

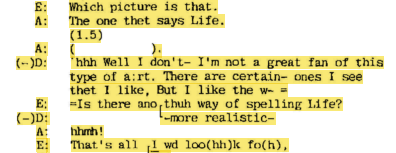

E then requests clarification of the referent being assessed (“Which picture is that.”), then interrupts D’s negative assessment, seemingly to raise a further criterion for assessing the print: the spelling of the word ‘Life’ in the print, which A points out. E then seems to claim that this is all they would look for in the print: “That’s all I wd loo(hh)k fo(h)”.

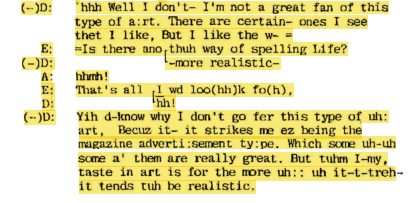

Finally, D delivers a critical assessment, raising several further criteria:

- that this print belongs to a type of art that can be more or less ‘realistic’, and that D assesses it to be ‘less realistic’. This criterion is coupled with an assessment that D likes the ‘more realistic’ examples of this ‘type of art’;
- that the print belongs to a “magazine advertisement” type, which can be more or less “great”, and D implies an assessment that this print is less great.

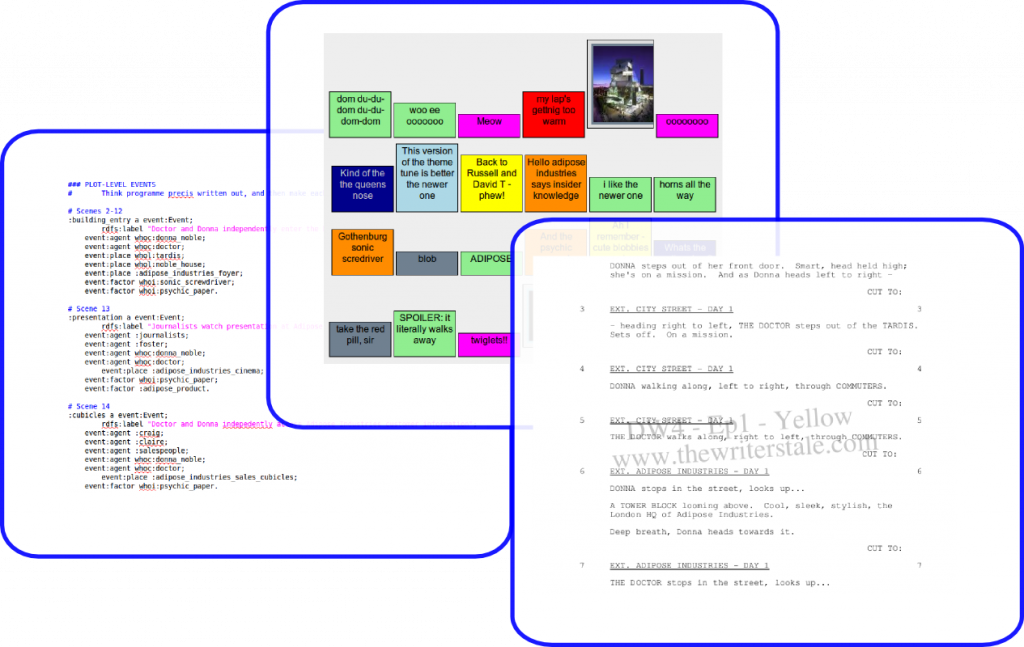

I am provisionally thinking of aesthetic judgements as assessment sequences in cases where the referent of the assessment is evidently ambiguous.

Although Pomerantz is only concerned with the overall structures of preference in agreement and disagreement with assessments, this extract does seem to bear out some of the assumptions in my research question: namely, that in aesthetic judgements, conversational participants seem to offer up candidate assessment criteria along with their assessments.

Other examples of assessment sequences in Pomerantz’s text that deal with more self-evident referents do not seem to exhibit this characteristic, suggesting that it may be useful to look at aesthetic judgements as a special case of conversational interaction.

Another assumption in my research question is that as conversational participants offer up candidate criteria as referents for their assessments, the opportunity for ‘topical drift’ is extended. The discussion of one referent may lead to another, and then yet another, potentially replacing the subject of an initial assessment with a sequence of second and third assessments of different referents altogether.

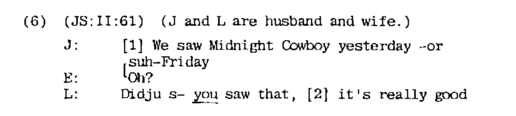

In a note on a section about ‘upgrades’ (described in her paper as strong agreements with assessments on sequential grounds), Pomerantz picks out an exception to the pattern she finds in the corpus, where upgrades reinforce prior assessments of the same referent: upgrades that first slightly modify the referent and then reinforce it:

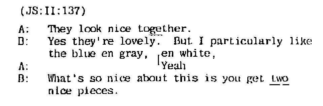

In this extract, A assesses as ‘nice’ the way two things appear together. B upgrades the assessment to ‘lovely’, but generalises the referent to the two things themselves, not their appearance together.

Pomerantz identifies this topic shift as part of a softening of a later dispreferred disagreement with an assessment: B eventually emphasises that what is nice is that the two pieces are separate, contradicting A’s initial assessment.

Once again, in the interim between assessment and dispreferred disagreement, B offers up the colours of the pieces (“blue en grey, en white”) as candidate criteria for assessment, with which A agrees in this sequence.

Again, this seems to bear out some of the intuitions in my research question: not only that a component of the conversational pragmatics of aesthetic judgements is the proffering of multiple criteria for assessment, but also that this process opens up an opportunity for a shift in the referent being assessed.

The qualification to these intuitions that I can take from Pomerantz’s paper is that this proffering of alternative candidate criteria may be seen as a specific case of deferring or delaying a dispreferred second assessment in a sequence.
