‘Blame’ and ‘dispreference’ in aesthetics and conversation analysis

I’ve started my literature review with Anita Pomerantz’s “Agreeing and disagreeing with assessments: some features of preferred/dispreferred turn shapes” to try and get a flavour of how ‘judgements of taste’ might look through the lens of conversation analysis (CA).

Pomerantz begins her paper with the assertion that assessments are a fundamental conversational feature of participation in an activity. She finds evidence for this claim in the way that people decline to make assessments of things they haven’t participated in.

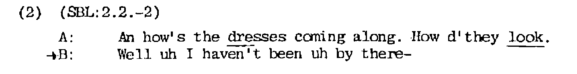

In the example below, this claim is demonstrated by B declining to deliver an expected assessment of the “dresses” by claiming lack of access to them: “I haven’t been uh by there-”.

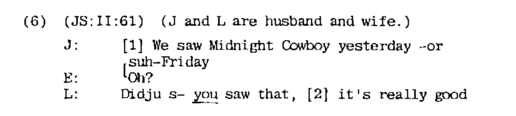

Pomerantz then points out that a statement of having participated in something is somehow incomplete without a corresponding assessment.

The above example intuitively bears this out: if L’s completion of this sequence with “it’s really good” were left out, the conversation would seem stilted.

Reading Pomerantz’s detailed CA account of how assessments are coordinated in speech alongside Kant’s and Hume’s philosophical discourses on aesthetics and judgement offers some compelling but potentially incompatible insights.

Pomerantz’s observations of how preference structures work in assessments, namely that there are ‘preferred’ and ‘dispreferred’ responses to assessments, seem to map onto the way Kant uses ‘blame’ in judgements of taste.

Kant’s critique of aesthetic judgement asserts that an aesthetic judgement is distinctive because we ‘blame’ others for not agreeing with us. Kant uses this as a way of differentiating between judgements of the ‘agreeable’ and judgements of taste. When something is ‘agreeable’ (a cute puppy, for example), we don’t argue the point with people who don’t like dogs: even if most people seem to find puppies agreeable, not everyone has to like all kinds of puppies. When discussing aesthetic judgements, however, we do argue the point. For Kant, that willingness to argue is what marks out an aesthetic judgement from other forms of judgement: it is (at least potentially, in the mind of the judger) a universal judgement.

Pomerantz bases her claim that there are ‘preferred’ and ‘dispreferred’ responses to assessments on the regularly observable structures of how people negotiate assessments in everyday conversation.

She identifies a preference for ‘second’ assessments that affirm the prior assessment (or negate it, in the case of self-deprecations). She describes what she calls a ‘preferred-action turn shape’, marked by an immediate response and by the absence of explanations, delays, or requests for repetition or clarification.

By contrast, she shows that ‘dispreferred-action turn shapes’ are consistently marked by conversational phenomena such as pauses, explanations, laughter and seeming agreements (‘Yes but… no but…’, to borrow from Vicky Pollard) that soften a contradiction (or the ‘dispreferred’ affirmation of a self-deprecation).

Could Kant’s idea of ‘blame’, the expectation or demand that others should agree with us, be equated in some way with the idea of a ‘preferred’ and a ‘dispreferred’ assessment? The problem with mapping an essentialist idea onto a phenomenological framework like CA is that everything starts to look like either a chicken or an egg. Are the conversational phenomena products of some underlying rule, or are Kant’s observations about the cases in which we ‘blame’ each other for disagreeing, and argue the point, themselves based on noticing certain degrees of dispreference in talk?

This may point to a fundamental problem in how I am constructing my question: philosophical discourses such as Kant’s and Hume’s may simply be incompatible with analyses based on the phenomena of dialogue. I suspect I need to read a lot more philosophy and conversation analysis to sharpen my questions to the point where these very different kinds of source material can be brought into play usefully.
